accesses since July 9, 2014

copyright notice

link to the published version: IEEE Computer, September, 2014

Like most of you, I use Wikipedia for handy access to public domain data like timelines, dates, locations, ages, names, places, etc. And like most of you, I don’t rely on Wikipedia for much beyond that. For reliable encyclopedic entries, online Britannica or even Encarta is preferred, and for everything else I have to pull the few authoritative resources from the flotilla of Google guano. That said, for a mid-altitude first pass over a hazy information landscape, Wikipedia is hard to beat.

The advantage of crowd-sourced projects is that they draw on a diversity of viewpoints. The disadvantage of crowd-sourced projects is also that they draw on a diversity of viewpoints. Not all members of crowds are equally well-informed, trustworthy, or reliable, and some most decidedly don't play well with others. James Surowiecki's book The Wisdom of Crowds (Anchor, 2005), and Pattie Maes' Firefly recommender system in the mid-1990s, were both worthy subjects of study – I'd proffer that both were provocative and inspired. But both seemed to me at the time to have a fundamental flaw: a failure to appreciate that not all crowds are worth associating with and that as a group they can't be relied upon to filter out the crap. If not all people are created equal, why would one expect more of a group? Put another way, reasoning by appeal to crowds is a softer, less direct version of appeal to authority. Crowds, like landfills, may contain treasures, but the yield rate isn't encouraging.

So that's a backdrop to my discovery of Figure 1 – a product of an ideology-based edit war on Wikipedia. I discovered this Wikipedia entry on January 8, 2013 at 8am. I discovered the reversion to the pre-vandalism entry one hour later (Figure 2). I use this example to illustrate a point: the big challenge with open source, crowd-oriented information repositories is the vetting problem. In this case, the rhetoric was so inflammatory that the hostile intent was easy to identify. Such is not always the case. Falsehood, deception, and lying are much harder to spot than ridicule, defamation and treachery, but far more insidious.

Figure 1: Wiki-vandalism; January 8, 2013, 8am

Figure 2: Reversion; January 8, 2013, 9am

About twenty years ago, with the advent of the Common Gateway Interface, the entire Internet community became obsessed with Web interactivity. My initial foray evolved from the ACM Electronic Communities Project ( http://www.acm.org/ccp/reports/ccp_rpt_5-30-97.html ) that I created and directed. I was also serving on the Publications Board chaired by Peter Denning at the time. Peter frequently spoke of the importance of member-engagement to the well-being of professional societies. I thought that was inspired and used ECP as the rubric to develop engaging technologies for ACM membership, including online Black Jack, an online, individual perpetual events calendar, and a volunteer hotline to attract member-volunteers to activities, to name but a few, all of which were prototyped and deployed on the ACM's website.

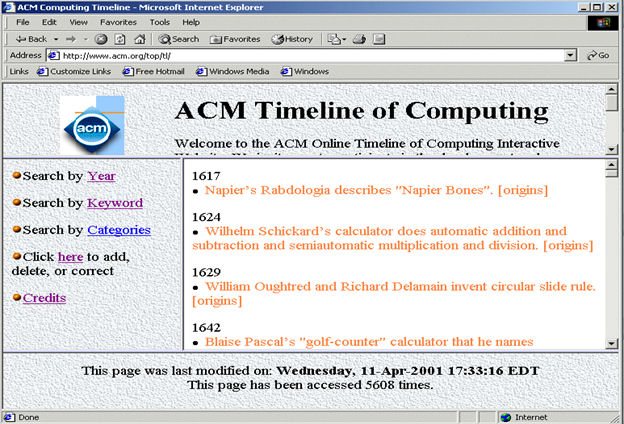

One of my ideas was the ACM Interactive Timeline of Computing. Many of you may remember the hardcopy 7-panel foldout that adorned computing department hallways and classrooms for many years. According to the minutes of the ACM Membership Board that I found online, the original timeline dated back at least to 1992 and owed its existence to Marc Rettig. It occurred to me that in a fast-moving field like computing, printing milestones on posters was inherently retro dorsal and better suited to the constant and invariable, like great works of literature, ruins of the ancient world, dynastic successions of monarchies, and major news events. So, I decided to develop an interactive website that allowed the computing community (aka the computing “crowd”) to continuously edit and update the entries.

INTERACTIVITIES

I built upon an idea that I hatched in the mid-1990s that ultimately surfaced as a digital ballot box (DBB) for student prizes (CACM, October, 1996, pp. 19-24). That evolved into the “Email: Good, Bad and Ugly” (EGBU) interactive website. In the latter I opined about these three aspects of email, deployed an interactive website in 1996 so that others might extend my observations, and published my observations in my CACM column (April, 1997, pp. 11-15). Although this site was mostly offered for amusement, I saw potential in using web interactivities to build knowledge bases – as long as the problem of vetting could be addressed. I didn't know it at the time, but Ward Cunningham had already staked out the claim for interactive wikis with the WikiWikiWeb in 1995. Ward's software was far more sophisticated than mine, but he was more interested in the technology of site construction than value-adding content vetting. The vetting problem finally went critical for him with the proliferation of extreme programming posts ( https://en.wikipedia.org/wiki/History_of_wikis#Growth_and_innovations_in_WikiWikiWeb_from_1995_to_2000 ) about the time that I launched EGBU.

Tony and I sought to remedy the major “known known” vetting problem in the design of the Interactive Timeline of Computing. We split the function into two parts: a formal interactive peer review system for the domain knowledge experts, and an informal balloting system for the users. The former was to provide primary quality control, while the latter was our feedback mechanism for anomalies that would help flag suspicious and dubious entries. The first prototype was launched over the summer months in 2000, followed in close succession by announcements in CACM in October and November of that year, and the formal release announced at ACM 1 in San Jose in March, 2001 (Fig. 3). The entire prototype was given to the ACM in 2001 - without permanent effect, I might add. I tried to convince the Computer Society to develop something similar a few years later with equal success. By then the notion of wikis, derived from the original Cunningham technology platform, had gone viral. To this day, the wikis I am familiar with all lack an adequate peer-review vetting process.

Figure 3: the Interactive Timeline of Computing in 2001
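
For readers who think in code, the two-part split described above can be sketched in a few lines. This is only an illustration of the idea, not the original Timeline software: the class name, method names, and both thresholds are hypothetical. Expert reviews alone decide whether an entry is published; user ballots never change content and can only queue a published entry for another look by the experts.

```python
from dataclasses import dataclass, field

# Hypothetical thresholds, chosen only for illustration.
APPROVALS_NEEDED = 2   # expert approvals required before publication
FLAG_THRESHOLD = 3     # distinct user flags that trigger re-review


@dataclass
class TimelineEntry:
    """One timeline milestone with separate expert and user channels."""
    title: str
    year: int
    approvals: set = field(default_factory=set)   # experts who approved
    rejections: set = field(default_factory=set)  # experts who rejected
    flags: set = field(default_factory=set)       # users who flagged an anomaly

    def expert_review(self, expert: str, approve: bool) -> None:
        """Formal peer review: each expert holds exactly one current verdict."""
        self.approvals.discard(expert)
        self.rejections.discard(expert)
        (self.approvals if approve else self.rejections).add(expert)

    def user_flag(self, user: str) -> None:
        """Informal ballot: a flag never edits the entry, it only raises a hand."""
        self.flags.add(user)  # a set, so repeat flags from one user count once

    def status(self) -> str:
        if self.rejections:
            return "rejected"
        if len(self.approvals) < APPROVALS_NEEDED:
            return "pending review"
        if len(self.flags) >= FLAG_THRESHOLD:
            return "published, queued for re-review"
        return "published"


entry = TimelineEntry("WikiWikiWeb launched", 1995)
entry.expert_review("expert_a", approve=True)
entry.expert_review("expert_b", approve=True)
print(entry.status())  # published
```

The design choice worth noticing is the asymmetry: the crowd can raise a hand, but only the jury can change the verdict.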

This is the backdrop to my January 8, 2013 discovery on Wikipedia, which still hadn't harnessed the vetting problem. For amusement I ran the following experiment to find out how their review process worked. In order to appreciate the underlying logic of my experiment, you have to take me at my word that I am in the best possible position to (a) spell my name, and (b) understand the point of my publications.

I created a Wikipedia account and immediately tried to correct a misspelling of my name. Apparent success was shortly dashed with a reversion notice. I tried again with the same result. Then I noticed that someone had linked to some of my work but the URL was wrong. I corrected it. Reversion! Then I noticed that someone had mentioned my research in an area (correctly), but referenced the wrong publication. Corrected? Nope, reverted. I limited myself to suggested corrections/additions/rewordings only on research areas with which I was very familiar. Reversion after reversion. That was my introduction to the world of wacky wikis. My opinion was subsequently solicited by email from a purported product manager for the Wikimedia Foundation, but the line went dead when I attempted to correspond. I want to emphasize here that Wikipedia has myriad rules on editing with which I am largely unfamiliar, so transgressions were possible. However, there is no question that the officious mechanisms in place are inconsistent with legitimate peer review. This is not a particular fault of Wikipedia but rather of wikis as such and in general.

I repeat: the quintessential problem for online repositories is the absence of an adequate vetting process, an absence that produces lightweight content. Instead of making a distinction between a jury of domain experts on the one hand and an approval voting system to flag anomalies on the other, Wikipedia combines both into crowdsourcing. Wiki wars (or edit wars) result when mini-crowds become mobs and empirical truth degenerates into opinion and ideology. I came away from my limited experiment with absolutely no idea what, if any, standards are used to determine which edits are retained and which are undone through reversion. That is the symptom of the problem. The problem is that reliability is a priori unquantifiable. I can say with conviction that the principle of allowing edits until some unnamed authority decides to revert is an absolutely idiotic way to create a reliable online resource and encourages the type of online vandalism depicted in Figure 1.

So it appears that the evolution of Cunningham's innovative wiki technology has far outstripped our confidence. Claims to the contrary notwithstanding, Wikipedia remains in my view only reliable when it comes to uncontroversial and incontrovertible facts. I submit that to domain knowledge experts who are actual authorities on a subject, articles are not verifiable and neutral, and I suspect that in most cases, if the topic is narrow enough, the authors may be handily identified from the narrative. That is to say, consensus is not the appropriate litmus test when accuracy is required and is not a good tool for dispute resolution, and no responsibility will ever be attributable to an anonymous source. In its defense, Wikipedia does not claim to be authoritative, but only collaborative.

Wikipedia's enormous success has deservedly attracted the interest of social scientists who study the effects of trolling, sock puppeting, edit wars, power plays, etc. and Wikipedia's own policies on the editorial results. An exemplar that comes to mind is Roy Rosenzweig's Can History be Open Source? Wikipedia and the Future of the Past ( http://chnm.gmu.edu/essays-on-history-new-media/essays/?essayid=42 ). This topic is ripe for PhD dissertations and has no doubt found acceptability in promotion and tenure decisions. I have nothing to contribute here other than to acknowledge that such studies are worthy of our attention lest we be lulled into unjustified acceptance of its content. I note as an aside that my concerns about the vetting problem were apparently shared by Wikipedia co-founder Larry Sanger and led to his creation of Citizendium ( http://en.citizendium.org/ ), which required some level of peer review – the same approach that we took with the Timeline nearly fifteen years ago.

From a personal perspective, I really wish I had known about Ward Cunningham's wiki software. That would have saved us a lot of work reinventing wheels. From the wiki perspective, perhaps the lesson to be learned is that unrestrained wikis are intrinsically unreliable, that truth is never a product of consensus, and that peer review has a disquieting effect on opiners of any stripe – all of which follows from my general attitude about crowds. I should also mention that the IEEE took a giant swerve around the vetting issue and simply digitized their timeline for online access ( http://www.ieeeghn.org/wiki/images/1/19/Timeline.pdf ) which, along with Wikipedia, also has its purpose.

Acknowledgements: This gives me an opportunity to recognize former colleagues and students who worked with me on many of these early interactivities, especially Doug Blank, Dan Berleant, Jiang Hong Song, and Pawel Wolinski.